Ethical, Transparent, and Inclusive: this is how Medicus applies international standards in AI development.

Article written by Tarek Turk, Medical Team Lead at Medicus AI.

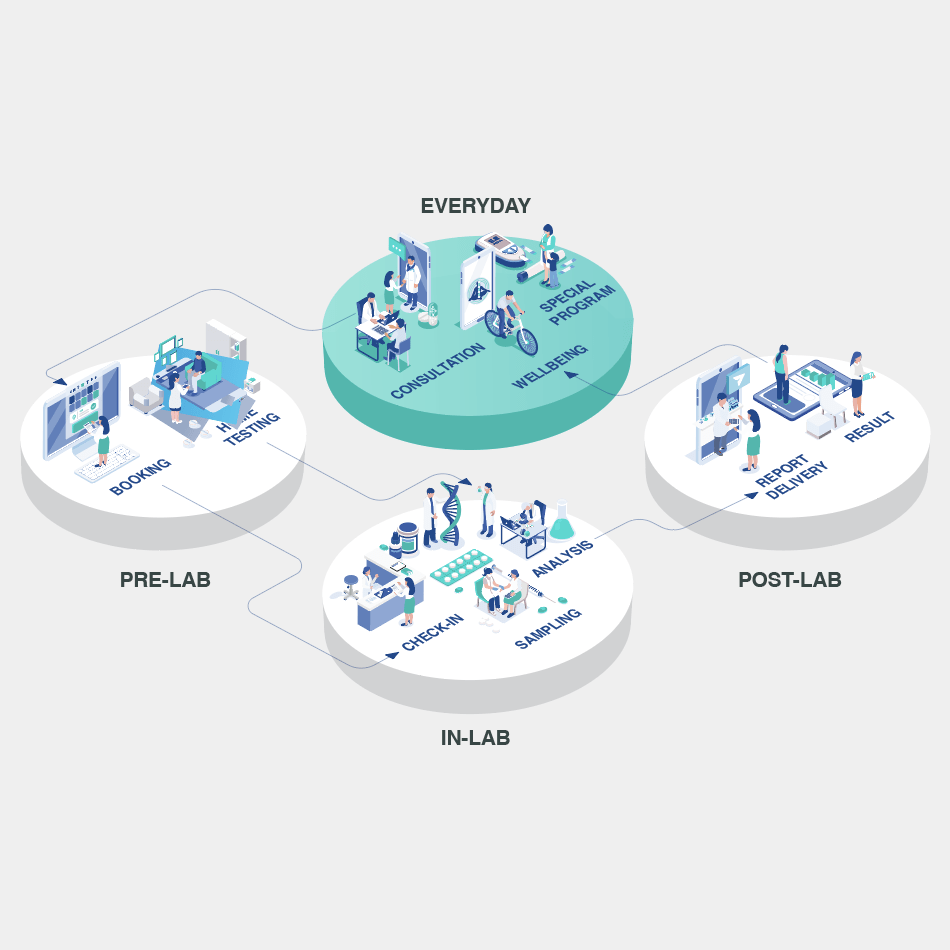

Artificial Intelligence (AI) has recently emerged as a valuable tool for healthcare. Interpreting big data and supporting patients’ and clinicians’ decision-making are among the challenges that AI can now help overcome. Misuse of AI, however, can pose digital and physical risks, and these risks have raised concerns worldwide. The World Health Organization (WHO) has addressed these concerns by issuing guidelines for developing AI products.

At Medicus AI, we have always believed that AI can contribute to better health. Even before the WHO guidelines, Medicus prioritized the safe and ethical use of AI in its operations, and internal discussions on best practices and recommendations on safety and ethics have always been the norm. Our aim is to build a sound AI strategy that best serves our communities and clients and results in products that are used on a global scale. When the WHO guidelines were published, a thorough internal assessment confirmed that we are on the right track, as the following items show.

Item 1: “Humans should remain in control of healthcare systems and medical decisions”.

AI systems that lack human judgement increase the risk of harm (e.g. overdiagnosing cancer). Dependence on AI could also reduce the health workforce’s ability to further evolve. AI is a tool to support humans, not replace them. Our products are regularly reviewed by experts, and the smart solutions we develop promote human verification prior to publication.

Item 2: “AI products should be required to meet standards for safety, accuracy and efficacy within well-defined use cases”.

We believe a “Do No Harm” approach is the only acceptable approach. AI products with low accuracy can harm their users. For instance, missing a malignant mass on a CT scan, ordering the wrong tests, or allocating a hospital bed to the wrong patient on the mistaken assumption that they would benefit more can all lead to catastrophic consequences. This usually happens when a system is not built on evidence-based health information, when it lacks proper testing and validation in real-life scenarios, or when human oversight is removed before high safety and accuracy have been achieved. Our rigorous validation process precedes any product launch. We scrutinize our work to tackle systematic or random errors by testing, developing, and re-testing.

Item 3: “AI developers should be transparent about how products are designed and function before they’re used”.

Transparency and reproducibility of research methods are key to any scientific endeavor, and they contribute to better health outcomes in the world of health products and services. Because we base the development of our AI algorithms, and all other products for that matter, on evidence-based health information, we keep a detailed methodology for the development and validation of each product component. Our methodology is used internally, shared with clients, and included in scientific publications when appropriate. Our transparent policies toward our stakeholders are fueled by their valuable feedback, which, without doubt, improves our work.

Item 4: “Healthcare businesses that rely on AI should ensure they are used under appropriate conditions by trained personnel”.

“Give your bread dough to a baker, even if they eat half of it” is an Arabic saying that highlights the importance of experts, and it guides our resource mobilization strategy. The development of each Medicus product is led by a specialized team of experts in health, management, technology, and business.

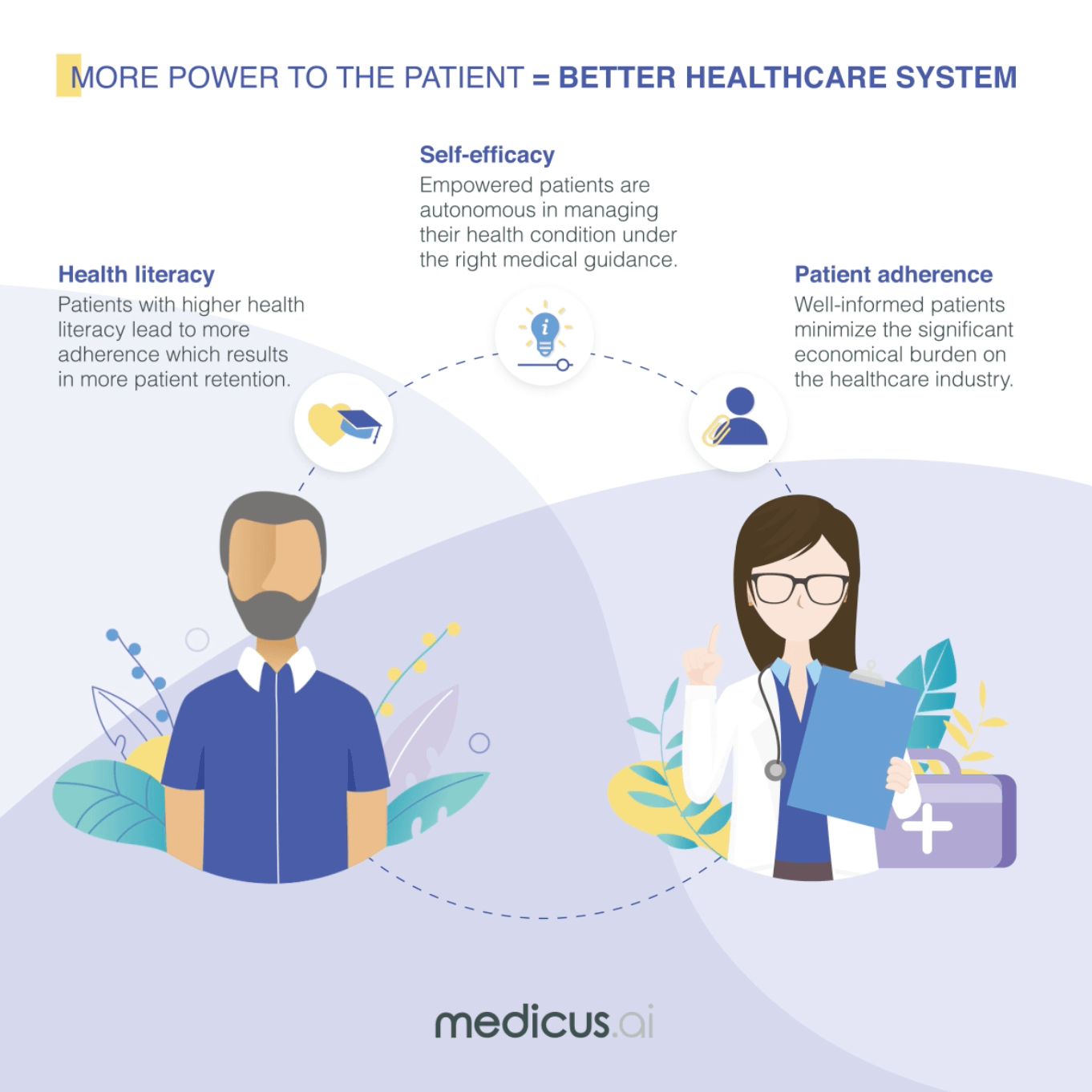

Item 5: “AI must be designed to encourage inclusiveness and equality”.

Inclusiveness and equality are core principles of any health intervention. Ignoring these principles in AI development could affect thousands of individuals, and training learning models on data or systems that lack inclusiveness could harm communities. At Medicus, we take pride in factoring in region, ethnicity, gender, and any other factor that can affect the outcomes of our smart systems when developing them.
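As an illustration of what checking for inclusiveness can look like in practice, here is a minimal Python sketch that compares a model's accuracy across subgroups and flags any group that falls noticeably behind the best-performing one. It is an assumption-laden example and not a description of Medicus's internal tooling: the function names `accuracy_by_group` and `flag_gaps`, the `max_gap` threshold, and the sample records are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return accuracy per subgroup.

    `records` is an iterable of (group, predicted_label, true_label) tuples.
    The record layout is a hypothetical one chosen for this sketch.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def flag_gaps(per_group, max_gap=0.05):
    """List groups whose accuracy trails the best group by more than `max_gap`.

    The 0.05 default is an arbitrary illustrative threshold, not a standard.
    """
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > max_gap)


# Made-up sample data: (group, predicted_label, true_label)
records = [
    ("region_a", 1, 1), ("region_a", 0, 0), ("region_a", 1, 1), ("region_a", 0, 1),
    ("region_b", 1, 0), ("region_b", 0, 0), ("region_b", 1, 0), ("region_b", 0, 1),
]

per_group = accuracy_by_group(records)
flagged = flag_gaps(per_group)
print(per_group)
print(flagged)
```

A group that appears in the flagged list would be a prompt for closer review by experts, for example checking whether that group is under-represented in the data, rather than a verdict on its own.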

Item 6: “The performance of AI applications should be continuously and transparently assessed during actual use”.

Analyzing products after they launch to larger populations is crucial. It helps detect issues that are impossible to identify without large-scale use. AI products need rigorous maintenance, especially for catching outliers that automated learning models may miss. We take several measures to ensure high-quality results, including recurring internal audits and comparative analyses of data and client feedback.

We are thrilled to see our AI models adhere to the WHO guidelines, and we will continue to develop our products in line with international ethical standards. Standardized guidelines bring value to AI use in health, result in safer and superior products, and ultimately better promote the wellbeing of individuals, a goal to which we continuously devote our time and effort.